Does this site still do reviews? I can't remember when the last one was even posted.

I like that three tier system, Yoda. I don't like the numerical scores on games, and this looks like a great alternative.

I like either really precise percentages or bland out-of-5-star systems.

What about 1UP's new system? I don't mind that. Anything above a C sounds good.

The problem with five-point gaming scores like the one 1UP uses is that each grade is essentially 20% "wide", so an A could be anything from 80% to 100%.
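To make the "20% wide" point concrete, here is a small Python sketch. It assumes five equal letter bands (A through F, 20 points each), which is a simplification for illustration rather than 1UP's actual grading scheme.

```python
# Hypothetical mapping: five equal letter bands, each 20 percentage points wide.
# (Simplified for illustration; not 1UP's real scale.)
BANDS = [(80, "A"), (60, "B"), (40, "C"), (20, "D"), (0, "F")]


def letter_grade(percent: float) -> str:
    """Collapse a 0-100 score into one of five 20-point-wide letter bands."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    for floor, letter in BANDS:
        if percent >= floor:
            return letter
    return BANDS[-1][1]  # not reached: every valid score is >= 0


# An 80% game and a 100% game end up with the same letter.
print(letter_grade(80), letter_grade(100))  # A A
```

Everything inside a band looks identical once it is reduced to a letter, which is exactly the information loss being described.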

My preference (not for this site) is what Play does: a scoreless review with a summary of 10 words or fewer at the end, so if you are in a rush you can read the short synopsis, but otherwise you read the review.

For scoring on this site I like your suggestion Yoda, or the half-increments 0 through 10.

If your site's purpose is just to give a general overview of the quality of the game, then sure, something like the above would work. But I don't care for it, because I want precise scoring. And I know everyone brings up the argument of "what's the difference between an 85 and an 84?" Oh, shut up: the difference is that it's a higher number, that's it. A larger scale allows for more nuance in the rating.

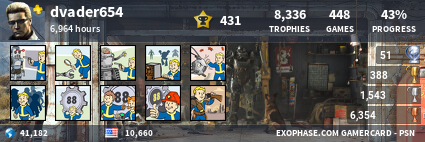

One of the site's forefathers.

Play fighting games!


Dvader said: If your site's purpose is just to give a general overview of the quality of the game, then sure, something like the above would work. But I don't care for it, because I want precise scoring. And I know everyone brings up the argument of "what's the difference between an 85 and an 84?" Oh, shut up: the difference is that it's a higher number, that's it. A larger scale allows for more nuance in the rating.

For me there is a difference between a 90% game and a 94% game.

Anything over 90% is great, but when it comes to greatness there is great and there is GREAT. If you know what I mean. And I like to know that distinction.

One more thing I'd like to add. I think there's a lot of value in emphasizing a game's genre as much as the system it's on, perhaps even more. Most reviews don't even mention genre, or if they do, it's just a little note somewhere at the beginning or end of the review. But ultimately that's what people compare games on. People don't argue over which game is better, Halo or Viva Pinata, just because both of them are 360 games. They compare Halo with Killzone, and Pinata with . . . well, whatever crap you compare that to. The Sims??

A party game that scores a 9 may not have the same value as a shooter or platformer that scores an 8. Likewise, a $5 downloadable WiiWare or XBLA game shouldn't be held to the same review standards as a $50-$60 console game. Stuff like that tends to be forgotten, or not even noticed, when a review is just quickly glanced at.
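One way a site could act on the genre point, sketched in Python: rank games only against others in the same genre instead of on one global list. The titles and scores below are made up for illustration, and the grouping function is hypothetical, not something any review site is known to use.

```python
from collections import defaultdict

# Made-up titles and scores, purely for illustration.
reviews = [
    ("Shooter X", "shooter", 8.0),
    ("Party Z", "party", 9.0),
    ("Shooter Y", "shooter", 7.5),
    ("Party W", "party", 6.0),
]


def rank_within_genre(entries):
    """Group (title, genre, score) tuples by genre and sort each group best-first."""
    by_genre = defaultdict(list)
    for title, genre, score in entries:
        by_genre[genre].append((title, score))
    return {
        genre: [title for title, _ in sorted(games, key=lambda g: g[1], reverse=True)]
        for genre, games in by_genre.items()
    }


for genre, titles in rank_within_genre(reviews).items():
    print(genre, titles)
# shooter ['Shooter X', 'Shooter Y']
# party ['Party Z', 'Party W']
```

The party game's 9 is never placed above the shooter's 8; each is only ranked against its own genre.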

Is It Time To Review Reviews?

No matter how much we might disagree with them, reviews are important. They tell us about games, they give opinions on how good they are compared to others, and help us work out what to spend our money on.

Back in the old days, magazine reviews were pretty much all we had to go on, and we hung on every word of the 4 or 5 articles about a game.

But now, the internet means that there are so many reviews, reviewers and review sites that "review aggregating" sites such as Metacritic or GameRankings are needed in order to get an overall opinion.

The problem is that they do the complete opposite: they don't give an overall opinion. Instead, they give extra weight to the haters and hide actual reviewers' opinions behind a mask of homogeneity.

The solution – a new form of aggregation formula, similar to that used by Rotten Tomatoes for movies.

When I started playing video games (about 28 years ago), game reviews were something that everyone read: they were how you found out about games, and how you judged which games you'd buy.

When I started in game development (about 17 years ago), reviews were still vitally important: they boosted your ego and your CV, they still swayed your purchases, and sometimes they even affected end-of-project bonuses.

Reviews mattered, and were taken seriously, in part because there weren't that many of them. In both of the above cases the number of reviews you'd get was limited: depending on the platform and the territory, maybe 4 or 5 magazines would cover your game, and the only one(s) you'd really be interested in were the ones the publisher would love to plaster quotes from on the box ("this game is awesome (5 stars)", Official [InsertConsoleName] Magazine, etc.).

Now however things have changed, at least in some regards.

Reviews are still the subjective opinions of people we (generally) don't know. Review scores are still used by many of us as an essential guide to the quality of a game.

But the rise of the internet and the demise of print have seen the number of review sites increase by orders of magnitude. So much so that it's no longer enough to have a few great reviews for your game; you now need enough of them that the AVERAGE review is great … enter the era of GameRankings, Metacritic et al.

These aggregation sites are practically essential for navigating the vast quantity of reviews for titles, so much so that game development contracts now specify GameRankings (or Metacritic) ratings as bonus/contractual criteria.

Unfortunately, these aggregation sites have a huge flaw: Metacritic and GameRankings are unfairly swung by bad reviews. If your game is averaging 80%, it takes two "excellent" 90% reviews to make up for one "not my sort of game" 60% review, because each 90% sits only 10 points above the average while the 60% sits 20 points below it. It takes four 90% reviews to make up for one "hater" 40% review. That's tough, particularly as bad reviews can easily be given by people who don't like that sort of game.
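The arithmetic behind those numbers can be checked with a few lines of Python. The helper name is hypothetical; it just uses the fact that, for the mean to stay put, deviations above it must cancel deviations below it.

```python
def reviews_needed_to_offset(baseline: float, low: float, high: float) -> float:
    """How many `high` reviews cancel one `low` review around a `baseline` mean.

    For the mean to stay at `baseline`, deviations must sum to zero: each high
    review sits (high - baseline) above it, the low one (baseline - low) below.
    """
    if high <= baseline:
        raise ValueError("high must be above the baseline to offset anything")
    return (baseline - low) / (high - baseline)


print(reviews_needed_to_offset(80, 60, 90))  # 2.0
print(reviews_needed_to_offset(80, 40, 90))  # 4.0

# Sanity check: one 60% plus two 90% reviews leaves an 80% average unchanged.
scores = [80, 80, 60, 90, 90]
print(sum(scores) / len(scores))  # 80.0
```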

These effects can, if you're unlucky, be magnified further, as many sites end up just duplicating the content of reviews from the main sites, making the aggregate even more arbitrary. The review "weighting" that some aggregators use (based on the status of the reviewing site) is intended to solve some of these problems, but it only exacerbates them should just one of those high-status sites happen to be your token "hater".
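A rough Python sketch of how weighting can backfire. The weights here are invented for illustration; the article doesn't say what weights any aggregator actually uses, only that some weight by site status.

```python
def weighted_average(scored):
    """Weighted mean of (score, weight) pairs."""
    total_weight = sum(weight for _, weight in scored)
    return sum(score * weight for score, weight in scored) / total_weight


# Hypothetical weights: the 40% "hater" review comes from a site weighted 3x.
equal_weights = [(90, 1), (85, 1), (80, 1), (40, 1)]
hater_on_big_site = [(90, 1), (85, 1), (80, 1), (40, 3)]

print(weighted_average(equal_weights))      # 73.75
print(weighted_average(hater_on_big_site))  # 62.5
```

Same four opinions, but the aggregate drops by over 11 points purely because of who the hater writes for.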

Now, I don't want to give the impression that "hater" reviews are bad; I believe there should not be any homogeneity in reviewing. Rather, reviews should be biased towards the opinions of the reviewer. That's why we read them.

BUT – those opinions only count when you actually READ the review, not when you just look at the score, which is all you get from the aggregator.

A score alone does not take into account the preferences of the reviewer. Can you tell whether the 40% that a game received in that one review (which dragged down the overall average) was because the reviewer was a hardcore shooter fan who really just didn't want to review that racing sim? Or because that horror-death game was reviewed by an extreme moralist?

In general, reviews provide a percentage that is supposed to let the public judge which is better, Game A or Game B, but can you really compare a racer to a shooter to a puzzle game to a pony sim?

Games can be dragged down by single elements that many would say "don't really matter". A PS3 game with graphics that look like a PS2 game's will get marked down for that even if it's crazy fun; maybe only by 10% or so, but that's enough to push it out of the tiny "top" percentage. This forces a blockbuster mentality, whereby the only way to get good reviews is to spend more than the last game did, whereas what we should be doing is asking, "Are we having fun?"

Review percentages are also based on a NOW comparison: games are compared to the quality of other current releases. So it might be fair to compare Blur with Split/Second, but how do you compare either of them to Ridge Racer, or to older titles? If a so-called classic game were reviewed now, it would be marked down accordingly; just take a look at some of the straight ports of arcade classics on Xbox Live Arcade. Those games rocked in their time, but now they languish with 50-70% review scores.

So, in summary, there are too many reviews to read them all, so we have to aggregate.

But aggregation just gives us an average score. Not an aggregate opinion.

Given that, in our industry, a 70% score is regarded as mediocre at best, an aggregate scoring system is unfairly biased towards "hater" reviewers. What we need is a completely different approach to reviews, one that allows for crazy bias, one that allows for opinion.

Thankfully, the movie industry has already worked out such a review process: Rotten Tomatoes. Rather than averaging percentages, it simply classes each review as either favourable ("Fresh") or negative ("Rotten"), and a film's score is the percentage of reviews that are favourable. Films are therefore scored on whether most reviewers liked them or not. So you get an opinion-based aggregation that focuses on entertainment value and not on arbitrary quality thresholds. And that's all win.
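A minimal Python sketch contrasting a plain average with a "share of favourable reviews" score in the spirit of Rotten Tomatoes. The scores are made up, and the cutoff of 60 for counting a numeric review as favourable is an assumption for illustration; it is not how Rotten Tomatoes itself classifies reviews.

```python
def average_score(scores):
    """Plain mean, as a Metacritic-style aggregate would report it."""
    return sum(scores) / len(scores)


def favourable_share(scores, threshold=60):
    """Fraction of reviews counted as favourable (score >= hypothetical threshold)."""
    favourable = sum(1 for s in scores if s >= threshold)
    return favourable / len(scores)


# Four reviewers liked it; one "hater" did not.
scores = [85, 80, 82, 78, 30]
print(average_score(scores))     # 71.0
print(favourable_share(scores))  # 0.8
```

The average lands at a "mediocre" 71%, while the favourable share says 80% of reviewers liked the game; the single hater costs one vote rather than dragging the whole number down.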

The answer is to use the most commonly occurring score, not averages.
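Here is what that suggestion looks like in Python, using `statistics.multimode` (Python 3.8+) so that ties come back as several scores rather than an arbitrary one. The scores and the wrapper function are illustrative only.

```python
from statistics import multimode


def most_common_scores(scores):
    """Return every score that appears most often (several if there's a tie)."""
    return multimode(scores)


scores = [80, 80, 85, 80, 40, 90]
print(most_common_scores(scores))           # [80]
print(round(sum(scores) / len(scores), 2))  # 75.83
```

The mode ignores how far the 40 sits from everything else, which is the point. The trade-off is that with a fine-grained 100-point scale many games will have few repeated scores, so the mode can come from very few reviews or end in a tie.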

I've always been an advocate of precise numerics. While certainly highly subjective, a score split into categories gives more insight into what worked and what didn't than an explanation alone. I also see it as a counterbalance to a review's tone, which may be harsher on a hyped game that only turned out good than on a game of similar quality that was a surprise.

That said, there is value in simplification and in avoiding shoehorning a number where it doesn't belong. This is where I think the real discussion should be: not precise score vs. vague score vs. no score, but rather, when simplifying, what is the best method?

I'm looking into providing an alternate review system for the site, but supplementary to what already exists -- the best of both worlds. But what should be the second world? I feel I have the answer, but feedback would be good.

People attach preconceived connotations to a score, and they're not the same for everyone. This applies to 1-10 ratings, 4- or 5-star ratings, etc. If we cut down from the 100-point scale to avoid the 89-vs-90 problem, I don't think merely switching to a star rating helps, because now you've created a whole new set of issues.

What I envision is a three-tier system that forgoes numeric values altogether:

Yes / Maybe / No

I think that ought to cover it.

---

Tell me to get back to rewriting this site so it's not horrible on mobile