It's black.

IBM on Wii U's CPU

IBM Microprocessors to Power the New Wii U System from Nintendo

ARMONK, N.Y., June 7, 2011 /PRNewswire/ -- IBM (NYSE: IBM) today announced that it will provide the microprocessors that will serve as the heart of the new Wii U™ system from Nintendo. Unveiled today at the E3 trade show, Nintendo plans for its new console to hit store shelves in 2012.

The all-new, Power-based microprocessor will pack some of IBM's most advanced technology into an energy-saving silicon package that will power Nintendo's brand new entertainment experience for consumers worldwide. IBM's unique embedded DRAM, for example, is capable of feeding the multi-core processor large chunks of data to make for a smooth entertainment experience.

IBM plans to produce millions of chips for Nintendo featuring IBM Silicon on Insulator (SOI) technology at 45 nanometers (45 billionths of a meter). The custom-designed chips will be made at IBM's state-of-the-art 300mm semiconductor development and manufacturing facility in East Fishkill, N.Y.

The relationship between IBM and Nintendo dates to May 1999, when IBM was selected to design and manufacture the central microprocessor for the Nintendo GameCube™ system. Since 2006, IBM has shipped more than 90 million chips for Nintendo Wii systems.

"IBM has been a terrific partner for many years. We truly value IBM's commitment to support Nintendo in delivering an entirely new kind of gaming and entertainment experience for consumers around the world," said Genyo Takeda, Senior Managing Director, Integrated Research and Development, at Nintendo Co., Ltd.

"We're very proud to have delivered to Nintendo consistent technology advancements for three generations of entertainment consoles," said Elmer Corbin, director, IBM's custom chip business. "Our relationship with Nintendo underscores our unique position in the industry -- how we work together with clients to help them leverage IBM technology, intellectual property and research to drive innovation into their own core products."

Built on the open, scalable Power Architecture base, IBM custom processors exploit the performance and power advantages of proven silicon-on-insulator (SOI) technology. The inherent advantages of the technology make it a superior choice for performance-driven applications that demand exceptional, power-efficient processing capability – from entertainment consoles to supercomputers.

Also, we have to remember that it has a different architecture. For instance, I read this on GAF:

"The Wii U has an I/O processor, GPGPU and a DSP. But this does bring up a question. Now we keep hearing that the Wii U's CPU is weak, but is it possible that it is just being programmed for incorrectly? By this I mean, the games being ported to the Wii U are made for consoles that have in-order CPUs, older GPUs that are not GPGPUs and no DSP. Couldn't that, theoretically, be holding down the Wii U's CPU with unnecessary processes and making it slower than it should be?"

"It's a combination of the Wii U being new hardware and not optimized yet, and the fact that the Wii U CPU is an out-of-order CPU instead of an in-order one like the Xenon and Cell. The Wii U instead relies on its GPGPU to handle most computing for games. Basically, this means the Wii U CPU is about on par with the PS360 and it's only good enough to run current-gen engines, but next-gen games will look great and play great on the Wii U because the PS4/720 are rumored to be switching to OoO CPUs as well and using GPGPUs.

Out-of-order CPUs execute instructions in whatever order their data becomes ready and can handle unpredictable things like AI and how the player reacts, whereas in-order CPUs tend to be better at more predictable, streamlined things like physics."

General-purpose computing on graphics processing units (GPGPU, GPGP or less often GP²U) is the means of using a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU).[1][2][3] Any GPU providing a functionally complete set of operations performed on arbitrary bits can compute any computable value. Additionally, the use of multiple graphics cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing.[4]

OpenCL is the currently dominant open general-purpose GPU computing language. The dominant proprietary framework is Nvidia's CUDA.

So, from what I have personally read as a non-tech guy, I'm coming to understand the nuances of the Wii U hardware and why it may not be performing that well with ports.

For a start, the SDK for E3 was shite. Team Ninja as much as said so when they explained why their E3 version of NG3 looked so dodgy. They said it had improved a lot since then, and you can see the difference in something like ZombiU between E3 and Gamescom.

The actual Wii U hardware seems to have some notable differences from current hardware.

CPU

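It has an out-of-order CPU, compared to the in-order CPUs of the 360 and PS3. They don't need different programming languages, but code tuned for an in-order CPU won't necessarily make the best use of an out-of-order one. Out of order is more flexible and is what is expected to be adopted for PS4 and Nextbox. (OpenCL, mentioned above, is a framework mainly used for GPU computing, so it isn't what separates in-order from out-of-order CPUs.)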

GPU

The Wii U has a general-purpose GPU (GPGPU), which is what the industry is moving towards: a graphics processing unit that also handles work traditionally done by the CPU. So if devs port 360 engines and put work on the CPU that the GPU could be doing, they will bog down the CPU unnecessarily.

DSP

The Wii U has a DSP (digital signal processor) for audio. Why is this important? Some 360 games used up chunks of the 360's CPU just processing sound; having a DSP chip means the CPU is freed up for other things.

I/O PROCESSOR

The Wii U has an I/O processor. This one is tricky, as the explanations I have read are not totally clear to me. Basically, I think it means that moving data between the components of the system is handled by a dedicated chip, so the main CPU doesn't have to spend time on it.

COMPUTE SHADERS

The Wii U is said to support compute shaders, better known to you as a DX11 feature, which is fairly state-of-the-art stuff. Many modern PC versions of console games with significant graphics enhancements use them: Crysis 3 on PC is using DX11, Star Wars 1313 is using it, etc.

The Wii U is said to have a built-in tessellation unit, which improves the level of detail in the environment. From what I can tell, it subdivides models into more triangles on the fly, adding detail depending on where you are or what you are looking at in an environment. You can see it in action in Crysis 3.
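To make the "more detail up close" idea concrete, here's a minimal CPU-side sketch in Python. It assumes simple midpoint subdivision and made-up distance thresholds (`near` and `max_level` are hypothetical), and it is not how the Wii U's tessellation unit works internally. It just shows the principle: each subdivision level splits every triangle into four, so nearby objects get far more triangles than distant ones.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def subdivide(tri: Triangle) -> List[Triangle]:
    """Split one triangle into four by joining its edge midpoints."""
    a, b, c = tri
    ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def level_for_distance(distance: float, near: float = 10.0, max_level: int = 4) -> int:
    """Hypothetical LOD rule: full detail within `near`, one level less per doubling of distance."""
    level, threshold = max_level, near
    while distance > threshold and level > 0:
        level -= 1
        threshold *= 2
    return level


def tessellate(tri: Triangle, level: int) -> List[Triangle]:
    """Apply `level` rounds of subdivision; the triangle count grows as 4**level."""
    tris = [tri]
    for _ in range(level):
        tris = [small for big in tris for small in subdivide(big)]
    return tris


base: Triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for distance in (5.0, 15.0, 1000.0):
    level = level_for_distance(distance)
    print(f"distance {distance}: level {level}, {len(tessellate(base, level))} triangles")
```

With these made-up thresholds, the same triangle becomes 256 triangles up close, 64 a bit further out and stays a single triangle far away. Real hardware tessellators work on patches with per-edge factors, but the "spend triangles where you're looking" idea is the same.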

MEMORY

The Wii U is expected to have 1.5 to 2GB of RAM, and Nintendo usually packs in superfast, high-quality RAM. That's 3 to 4 times as much RAM as the 360 and PS3. In practice, this means higher-resolution textures, less compression, and potentially larger environments or longer draw distances.
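The "3 to 4 times" figure is simple division against the 512MB that both current consoles have in total (the 360's unified 512MB, and the PS3's 256MB main plus 256MB video memory). A quick check using the expected Wii U totals from above; note these are totals, and OS reservations reduce what games actually get:

```python
MB_PER_GB = 1024

xbox360_total_mb = 512          # unified memory shared by CPU and GPU
ps3_total_mb = 256 + 256        # 256 MB main (XDR) + 256 MB video (GDDR3)
wiiu_expected_mb = [1.5 * MB_PER_GB, 2 * MB_PER_GB]  # rumoured range, not confirmed

# (ratio vs 360, ratio vs PS3) for each expected Wii U total
ratios = [(total / xbox360_total_mb, total / ps3_total_mb) for total in wiiu_expected_mb]
for total, (vs_360, vs_ps3) in zip(wiiu_expected_mb, ratios):
    print(f"{total / MB_PER_GB:.1f} GB = {vs_360:.0f}x the 360, {vs_ps3:.0f}x the PS3")
```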

DISC FORMAT

The Wii U has 25GB proprietary discs. "But the PS3 has Blu-ray," I hear you say. Yes, but its disc drive is perilously slow, and it doesn't have the RAM to hold the larger, more detailed textures you can pack onto a higher-capacity disc. The Wii U's disc drive will almost certainly have a higher read rate, and with triple to quadruple the RAM, plus the higher IQ (image quality) enabled by three times as much eDRAM as the 360, it should technically be able to do these textures justice.

EDRAM

The 360 has 10MB of eDRAM; the Wii U has 32MB, which means it should have better anti-aliasing, among other things. To quote GAF:

"It's very good, and a nice surprise. To put it another way, it would be unlikely any other next-gen system would have much more than 32MB eDRAM, and considering the Wii U won't be on par with those systems, it bodes well for IQ (image quality) being markedly improved over what average gamers are used to with the PS3 and 360. The 360 did have 10MB of eDRAM (which was why blu made mention of the 10MB), but indications are the Wii U's won't simply be newer and have 3x as much, but that it might be more synergistic with the GPU than the 360's was. It will be able to support "free" AA, for example, to improve IQ, but it depends on whether developers bother to do it or not. The Wii U will be capable of it, though."

So it looks like PS4 and Nextbox will have out-of-order CPUs and GPGPUs too, which bodes well for Wii U ports, but that isn't helping at the moment if devs are using 360 engines to build Wii U games.
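Back on the eDRAM point: one reason the jump from 10MB to 32MB matters for anti-aliasing is that an MSAA render target grows with every extra sample. A rough sketch, assuming a 1280×720 target with 4 bytes of colour plus 4 bytes of depth/stencil per sample and no compression (real hardware and engines differ, and the eDRAM may hold other buffers too):

```python
def render_target_mb(width: int, height: int, samples: int, bytes_per_sample: int = 8) -> float:
    """Size in MB (1024 * 1024 bytes) of colour + depth for one MSAA render target."""
    return width * height * samples * bytes_per_sample / (1024 * 1024)


EDRAM_MB = {"Xbox 360": 10, "Wii U": 32}

for samples in (1, 2, 4):
    size = render_target_mb(1280, 720, samples)
    verdicts = ", ".join(
        f"{console}: {'fits' if size <= capacity else 'does not fit'}"
        for console, capacity in EDRAM_MB.items()
    )
    print(f"720p, {samples}x MSAA: {size:.2f} MB ({verdicts})")
```

Under these assumptions, 720p without AA fits in both, but 2x and 4x MSAA only fit in 32MB. On the 360, games that used MSAA at 720p had to render the frame in tiles to work around the 10MB limit.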

Ideaman, the guy who has been telling us accurate stuff about the Wii U for months now (presumably from Ubisoft sources), said this about the Wii U yesterday.

This is a bunch of his posts combined into one quote; note that the bolding is his editing, not mine:

Ideaman said: On a broader subject than sound, a little golden nugget: the level of overall improvement some developers managed to reach for their projects between my Spring posts and now is awesome, huge, bigger than what I expected and said back in those days.

HYPE!

Can't be too specific. But let's say the framerate is one of the ways to benchmark the "performance" of a game running on a dev kit. They managed to increase the framerate of their projects by a lot since my Spring posts. It's thanks to many parameters: new dev kits, SDK revisions, improvements to their engines, etc. All while enhancing the image quality and the effects.

It's positive news, and as Iwata said, it confirms (if it was needed) that the Wii U, like every other new system, takes time for developers to master. They definitely have a better grasp on the machine than a few months ago, and this greater familiarization is seen through this leap in performance I've just touched upon.

Well, all I can say is explained in my posts: some already announced and presented games on Wii U saw their framerate greatly improved during their development since my Spring posts. It shows that developers got a better grasp on the system, on the tools to make their titles on it, etc. And yep, this cool news comes with improvement in the visual department too (more effects, implementation of anti-aliasing, etc.).

(Question from GAF member) What would you define as greatly improved? E.g. going from 30 fps to 60 fps, or going from a choppy 30 (or 60) fps to a stable 30 (or 60) fps?

Well, I used bold and underline. So it's great great, not meh great. It's not just 5 fps more. I don't know, however, whether this kind of improvement has been seen across the board for other games, but it involves at least a few.
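The difference between "5 fps more" and 30 to 60 fps is easiest to see in frame times, since the time budget per frame is 1000 ms divided by the framerate. A quick sketch (the framerates are just examples, not figures from any specific game):

```python
def frame_time_ms(fps: float) -> float:
    """Time budget for one frame, in milliseconds."""
    return 1000.0 / fps


def ms_saved(from_fps: float, to_fps: float) -> float:
    """How much faster each frame must be produced to hit the higher framerate."""
    return frame_time_ms(from_fps) - frame_time_ms(to_fps)


for before, after in [(30, 35), (30, 60)]:
    print(f"{before} -> {after} fps: {frame_time_ms(before):.1f} ms -> "
          f"{frame_time_ms(after):.1f} ms per frame ({ms_saved(before, after):.1f} ms saved)")
```

Going from 30 to 60 fps means producing each frame in half the time, roughly 16.7 ms saved per frame, versus under 5 ms for a 30 to 35 fps bump.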

Foolz said: What are the specs on the duct tape?

The sticky-ness is set to about 3.2 ghz/sec.

edgecrusher said: The sticky-ness is set to about 3.2 ghz/sec.

Well, IBM's Watson Twitter feed said it's based on POWER7 chips, and the 45 nm SOI process (whatever that means) is confirmed. 3 GHz to 4.25 GHz is the range of the POWER7 chip, but the Wii U CPU is custom, so it could be clocked lower; I doubt it would be higher.

The POWER7 is a multi-core processor, available with 4, 6, or 8 cores. There is also a special TurboCore mode that can turn off half of the cores from an eight-core processor, but those 4 cores have access to all the memory controllers and L3 cache at increased clock speeds. This makes each core's performance higher, which is important for workloads that require the fastest sequential performance at the cost of reduced parallel performance. TurboCore mode can reduce "software costs in half for those applications that are licensed per core, while increasing per core performance from that software."[10] The IBM Power 780 scalable, high-end servers feature the new TurboCore workload-optimizing mode and deliver up to double the per-core performance of POWER6-based systems.[10]

Each core is capable of four-way simultaneous multithreading (SMT). The POWER7 has approximately 1.2 billion transistors and is 567 mm² large, fabricated on a 45 nm process. A notable difference from POWER6 is that the POWER7 executes instructions out-of-order instead of in-order. Despite the decrease in maximum frequency compared to POWER6 (4.25 GHz vs 5.0 GHz), each core has higher performance than the POWER6, while having up to 4 times the number of cores.

POWER7 has these specifications:[11][12]

- 45 nm SOI process, 567 mm²
- 1.2 billion transistors
- 3.0–4.25 GHz clock speed
- Max 4 chips per quad-chip module
- 4, 6 or 8 cores per chip
- 4 SMT threads per core (available in AIX 6.1 TL05, released April 2010, and above)
- 12 execution units per core:
  - 2 fixed-point units
  - 2 load/store units
  - 4 double-precision floating-point units
  - 1 vector unit supporting VSX
  - 1 decimal floating-point unit
  - 1 branch unit
  - 1 condition register unit
- 32+32 kB L1 instruction and data cache (per core)[13]
- 256 kB L2 cache (per core)
- 4 MB L3 cache per core, with a maximum of 32 MB supported. The cache is implemented in eDRAM, which does not require as many transistors per cell as a standard SRAM,[5] so it allows for a larger cache in the same area as SRAM.
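The eDRAM point in that last item can be put in numbers. A standard SRAM cell uses six transistors per bit, while a DRAM cell uses one transistor plus a capacitor. A rough sketch counting only the storage cells (it ignores tags, ECC, sense amplifiers and other support circuitry, so real totals are higher):

```python
BITS_PER_MB = 1024 * 1024 * 8
SRAM_TRANSISTORS_PER_BIT = 6    # conventional 6T SRAM cell
EDRAM_TRANSISTORS_PER_BIT = 1   # 1T1C DRAM cell (one transistor + one capacitor)


def cell_transistors(size_mb: int, transistors_per_bit: int) -> int:
    """Transistors needed for the storage cells alone of a cache of size_mb megabytes."""
    return size_mb * BITS_PER_MB * transistors_per_bit


for size_mb in (4, 32):
    sram = cell_transistors(size_mb, SRAM_TRANSISTORS_PER_BIT)
    edram = cell_transistors(size_mb, EDRAM_TRANSISTORS_PER_BIT)
    print(f"{size_mb} MB L3: ~{sram / 1e9:.2f} billion transistors as SRAM "
          f"vs ~{edram / 1e9:.2f} billion as eDRAM")
```

For the full 32 MB, 6T SRAM cells alone would need about 1.6 billion transistors, more than the roughly 1.2 billion in the whole POWER7 chip listed above, whereas eDRAM cells need about 0.27 billion.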

"Each POWER7 processor core implements aggressive out-of-order (OoO) instruction execution to drive high efficiency in the use of available execution paths. The POWER7 processor has an Instruction Sequence Unit that is capable of dispatching up to six instructions per cycle to a set of queues. Up to eight instructions per cycle can be issued to the Instruction Execution units. The POWER7 processor has a set of twelve execution units as [described above]"[14]

This gives the following theoretical performance figures (based on a 4.14 GHz 8-core implementation):

- Max 33.12 GFLOPS per core
- Max 264.96 GFLOPS per chip
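Those peak figures are just clock speed × floating-point operations per cycle × cores. Working backwards, 33.12 / 4.14 = 8 FLOPs per cycle per core, which matches the four double-precision FP units each doing a fused multiply-add (2 FLOPs) every cycle; that breakdown is an inference, not something the spec list states. These are theoretical peaks for a 4.14 GHz, 8-core POWER7, not figures for the Wii U's custom 3-core chip:

```python
def peak_gflops(clock_ghz: float, flops_per_cycle: int, cores: int = 1) -> float:
    """Theoretical peak: every FP unit busy every cycle, which real code never achieves."""
    return clock_ghz * flops_per_cycle * cores


FP_UNITS_PER_CORE = 4   # double-precision FPUs per POWER7 core (from the spec list)
FLOPS_PER_FMA = 2       # assumption: each unit completes one fused multiply-add per cycle

per_core = peak_gflops(4.14, FP_UNITS_PER_CORE * FLOPS_PER_FMA)
per_chip = peak_gflops(4.14, FP_UNITS_PER_CORE * FLOPS_PER_FMA, cores=8)
print(f"{per_core:.2f} GFLOPS per core, {per_chip:.2f} GFLOPS per chip")
```

This prints 33.12 and 264.96, matching the list. The same formula shows why clock speed alone says little: a chip with fewer FLOPs per cycle or fewer cores can have a much lower peak at the same GHz.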

gamingeek said: max 33.12 GFLOPS per core

max 264.96 GFLOPS per chip

This is key.

edgecrusher said: This is key.

I don't even know what that means.

gamingeek said: I don't even know what that means.

Me either. But it's key.

I found this interesting; clock speeds are really misleading:

A notable difference from POWER6 is that the POWER7 executes instructions out-of-order instead of in-order. Despite the decrease in maximum frequency compared to POWER6 (4.25 GHz vs 5.0 GHz), each core has higher performance than the POWER6, while having up to 4 times the number of cores.

No^

Eurogamer: How Powerful is the Wii U Really?

Crucially, developers Eurogamer spoke to as part of a wide-ranging investigation into the innards of the Wii U now have final specifications.

The CPU: The Wii U's IBM-made CPU is made up of three PowerPC cores. We've been unable to ascertain the clock speed of the CPU (more on this later), but we know out of order execution is supported.

RAM in the final retail unit: 1GB of RAM is available to games.

GPU: The Wii U's graphics processing unit is a custom AMD 7 series GPU. Clock speed and pipelines were not disclosed, but we do know it supports DirectX 10 and shader 4 type features. We also know that eDRAM is embedded in the GPU custom chip in a similar way to the Wii.

More at the link.

They have only listed the RAM "available to games". We know there is more than 1GB of RAM, but we have heard that the operating system takes up a chunk. The GPU info contradicts what the leaked specs said about compute shaders, which are more of a Shader Model 5 feature, but they do say that pipeline details have not been disclosed. The leaked specs were the initially targeted specs, though. It seems we know more about the eDRAM than Eurogamer does: it's 32MB, compared to the 360's 10MB.

Ah, I see:

"People do realise that Shader Model 5.0 is just Microsoft's version in HLSL, and that OpenGL (which Wii U uses) has the same features in GLSL 4.3, right?"

Also, Ideaman has explained that there is more than 1GB of RAM:

Devs were initially told to target 1GB, as a huge chunk has to be used for the OS; then Nintendo said they were going to raise it. So it's more likely to be what we expected: 1.5-2GB of RAM.

First, the positives. Where the Wii U outdoes the current generation is in RAM and GPU. The Wii U has, effectively, twice the RAM available to games that the Xbox 360 and the PS3 each have. And we hear much praise of the GPU.

"The Wii U is a nice console to work with because it's got so much RAM in comparison [to the PS3 and Xbox 360]," another Wii U developer, who wished to remain anonymous, told us. "For E3 we simply dumped the whole game into memory and never once used the disc after the content was loaded from it."

It is the RAM in combination with the GPU that means Wii U games have the potential to outshine Xbox 360 and PS3 games. Indeed, according to one source, the Wii U version of his company's game will be "the smoothest console version".

"We're a GPU-heavy game," the source continued. "Wii U has a powerful GPU with more oomph than the rivals - and is more modern in architecture and shader support, which may come in handy later on.

"The CPU on the other hand is a different question. We are not limited by it but some other games might suffer from it. Still, because of the GPU, I expect most multi-platform games to look the best on Wii U, even if the difference might not be huge sometimes."

The mention of the Wii U's CPU raised our eyebrow - as it has done for many developers we've spoken to. While its clock speed remains private, most developers agree it is lower than the clock speed offered by the PS3 and Xbox 360's CPUs - disappointing for many.

Looks like the CPU rumours were true, but they don't mention how the GPGPU, I/O processor and DSP offload work from the CPU. Well, they kind of say it: the GPU is good enough that they still expect Wii U games to look better. DSP support was only unlocked a couple of months back, so before then devs were running audio on the CPU.

Assuming Microsoft and Sony launch their next-generation consoles late next year, the Wii U will enjoy 12 months as (potentially) the most powerful console on the market. But the development community expects big things from the Xbox 720 and PS4 - power leaps that make costly investments worthwhile.

Then, the Wii U will lag significantly behind in the graphics stakes. At least, that's what we're being told. The next Xbox, we understand, allows developers to use the DX11 graphics standard to easily shift code from PC to the console. The Wii U is capable only of DX10 (one of the reasons you won't see an Unreal Engine 4 game on it). And let's not forget that processor.

Ruh roh

One developer working on a Wii U launch game told us he was able to optimise his game's CPU main core usage by up to 15 per cent throughout the course of development, and further optimisations are expected before the game comes out.

Remember what Ideaman said:

Ideaman said: On a broader subject than sound, a little golden nugget: the level of overall improvement some developers managed to reach for their projects between my Spring posts and now is awesome, huge, bigger than what I expected and said back in those days.

HYPE!

A lot of people on different sites are now questioning the "DX10" comment in the Eurogamer article. Apparently it doesn't match up with the R700-series GPU the Wii U is based on.

Also, the system itself doesn't use DirectX at all, as it's not a PC or a Microsoft product.

Smelly said:

The Wii U is capable only of DX10

Holy fuck! Eurogamer has just announced a MAJOR scoop! The Wii U runs on Microsoft's DirectX, and not OpenGL!

(or more likely the guy writing the article knows fuck all about what he's talking about)


Eurogamer replied:

@smelly We've tweaked that line to clarify. It was meant to highlight the DX10 support, not indicate it is limited to it. Appreciate this wasn't clear. Apologies for the confusion.

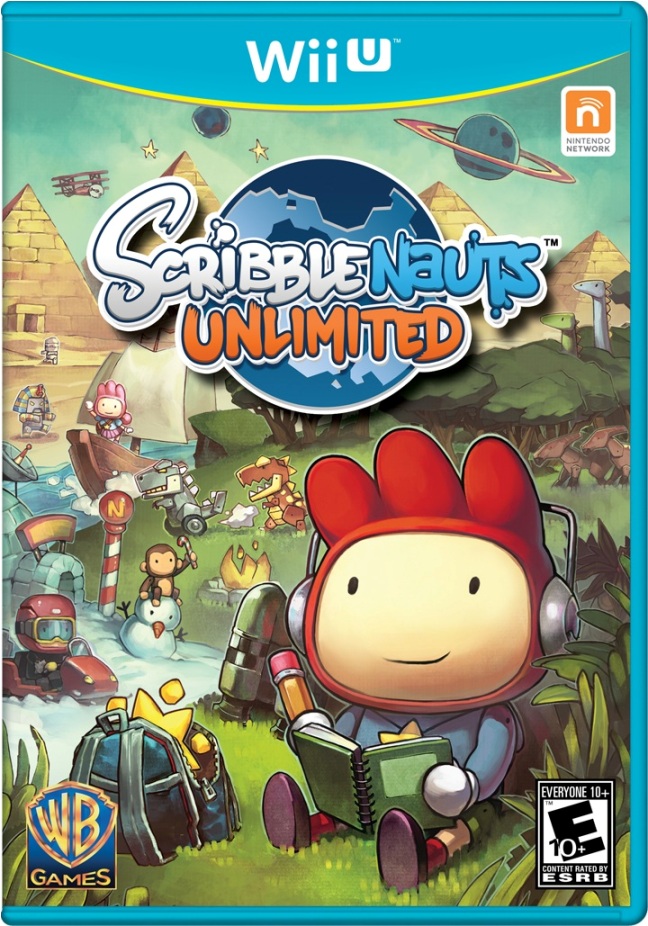

Remember when Crytek said that they were not making a Wii U game, but that third parties were using CryEngine 3 on Wii U and that it ran "beautifully"?

Well, we all expected it was this, and the ESRB has confirmed it: Sniper: Ghost Warrior 2. Is this series any good?

This is the tech trailer

On the CPU, the article is talking about clock speeds again. From what I have read, clock speeds are not a great way of judging a CPU's performance. For instance:

gamingeek said: I found this interesting; clock speeds are really misleading:

A notable difference from POWER6 is that the POWER7 executes instructions out-of-order instead of in-order. Despite the decrease in maximum frequency compared to POWER6 (4.25 GHz vs 5.0 GHz), each core has higher performance than the POWER6, while having up to 4 times the number of cores.

But also, reading GAF, it seems these developers are not used to out-of-order (OoO) processors, as they have been making games for the in-order CPUs of the current-gen consoles for so long. These articles and quotes do not weigh the benefit of the Wii U CPU being an out-of-order CPU.

Wikipedia said: The key concept of OoO processing is to allow the processor to avoid a class of stalls that occur when the data needed to perform an operation are unavailable. In the outline above, the OoO processor avoids the stall that occurs in step (2) of the in-order processor when the instruction is not completely ready to be processed due to missing data.

OoO processors fill these "slots" in time with other instructions that are ready, then re-order the results at the end to make it appear that the instructions were processed as normal. The way the instructions are ordered in the original computer code is known as program order, in the processor they are handled in data order, the order in which the data, operands, become available in the processor's registers. Fairly complex circuitry is needed to convert from one ordering to the other and maintain a logical ordering of the output; the processor itself runs the instructions in seemingly random order.

The benefit of OoO processing grows as the instruction pipeline deepens and the speed difference between main memory (or cache memory) and the processor widens. On modern machines, the processor runs many times faster than the memory, so during the time an in-order processor spends waiting for data to arrive, it could have processed a large number of instructions.

So in layman's terms, in-order processors waste a lot of time stalled, waiting for data, whereas out-of-order processors fill that time with other instructions that are ready, so much less time is wasted. Potentially, despite a lower clock speed, code that plays to an OoO CPU's strengths can run just as well as it would on a higher-clocked in-order CPU.

Voila.
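To see why those stalls matter, here's a toy model in Python. It is a deliberately simplified sketch with made-up latencies, not a model of Xenon, Cell or the Wii U CPU: a single-issue core starts at most one instruction per cycle. The in-order version can only start the oldest waiting instruction, while the out-of-order version may start any of the oldest few (`window`, a hypothetical parameter) whose inputs are ready. The example program has a slow load (think cache miss), one instruction that needs its result, and six independent instructions.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Instr:
    name: str
    latency: int                 # cycles until the result can be used
    deps: Tuple[int, ...] = ()   # indices of earlier instructions whose results it needs


def run(program: List[Instr], out_of_order: bool, window: int = 8) -> int:
    """Return the cycle at which the last result is ready."""
    assert all(d < i for i, ins in enumerate(program) for d in ins.deps), "deps must point backwards"
    ready_at: List[Optional[int]] = [None] * len(program)  # None = not started yet
    cycle = 0
    while any(r is None for r in ready_at):
        waiting = [i for i, r in enumerate(ready_at) if r is None]  # oldest first
        candidates = waiting[:window] if out_of_order else waiting[:1]
        for i in candidates:
            if all(ready_at[d] is not None and ready_at[d] <= cycle for d in program[i].deps):
                ready_at[i] = cycle + program[i].latency  # start it this cycle
                break                                      # single issue: one start per cycle
        cycle += 1
    return max(ready_at)


PROGRAM = [Instr("load (cache miss)", 20), Instr("add using the load", 1, deps=(0,))] + [
    Instr(f"independent op {k}", 1) for k in range(6)
]

print("in-order:    ", run(PROGRAM, out_of_order=False), "cycles")
print("out-of-order:", run(PROGRAM, out_of_order=True), "cycles")
```

With these numbers the in-order core finishes at cycle 27 and the out-of-order core at cycle 21: the six independent instructions run during the load's wait instead of after it. Real CPUs also use other tricks to hide stalls, so treat this as an illustration of the idea only.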

That whole "weak CPU" thing smells like bullshit to me. Of course it'll be clocked lower, as high clock speeds are a surefire way to burn way too much power and produce way too much heat for such a small device. But high clock speeds are also tied to longer pipelines - at the end of the day, there are routines that would be faster on a higher clocked, in-order CPU with a longer pipeline, and there are things that perform better on a lower clocked, out-of-order CPU with a shorter pipeline. We had the same thing in the PC space years ago with the Pentium 4.

So yes, the Wii U CPU is clocked lower and has the same number of cores as Xenon, probably the same number of SIMD units as well. Some floating point number crunching routines will be faster on Xbox360, and developers relying on those will run into problems with ports. But if the code needs to kill the pipeline every few cycles, the Wii U CPU would probably run circles around Xenon.
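The trade-off in these last two paragraphs can be sketched with the usual back-of-the-envelope formula: time per instruction = (base cycles per instruction + mispredictions per instruction × flush penalty) ÷ clock speed, where a longer pipeline means a bigger flush penalty. Both CPUs below are hypothetical, with made-up numbers, and aren't meant to represent Xenon or the Wii U CPU:

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    clock_ghz: float
    base_cpi: float      # cycles per instruction when nothing goes wrong
    flush_penalty: int   # cycles lost each time the pipeline is flushed (grows with depth)


def ns_per_instruction(cpu: Cpu, mispredicts_per_instr: float) -> float:
    cycles = cpu.base_cpi + mispredicts_per_instr * cpu.flush_penalty
    return cycles / cpu.clock_ghz  # GHz = cycles per ns, so cycles / GHz = ns


fast_long = Cpu("high clock, long pipeline", clock_ghz=3.0, base_cpi=1.0, flush_penalty=20)
slow_short = Cpu("lower clock, short pipeline", clock_ghz=2.0, base_cpi=1.0, flush_penalty=6)

for label, rate in [("predictable number crunching", 0.0), ("branchy code, flush every 10 instrs", 0.1)]:
    a, b = (ns_per_instruction(c, rate) for c in (fast_long, slow_short))
    winner = fast_long.name if a < b else slow_short.name
    print(f"{label}: {a:.2f} ns vs {b:.2f} ns -> {winner} wins")
```

With no mispredictions the higher-clocked design wins (about 0.33 ns vs 0.5 ns per instruction), but once the pipeline is flushed every ten instructions the shorter pipeline comes out ahead (1.0 ns vs about 0.8 ns), which is the "kill the pipeline every few cycles" case.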

If the Wii U is 3 Wiis duct-taped together, you could say that it's 6 GameCubes taped together. Now if only we could work out how many NESes that is.